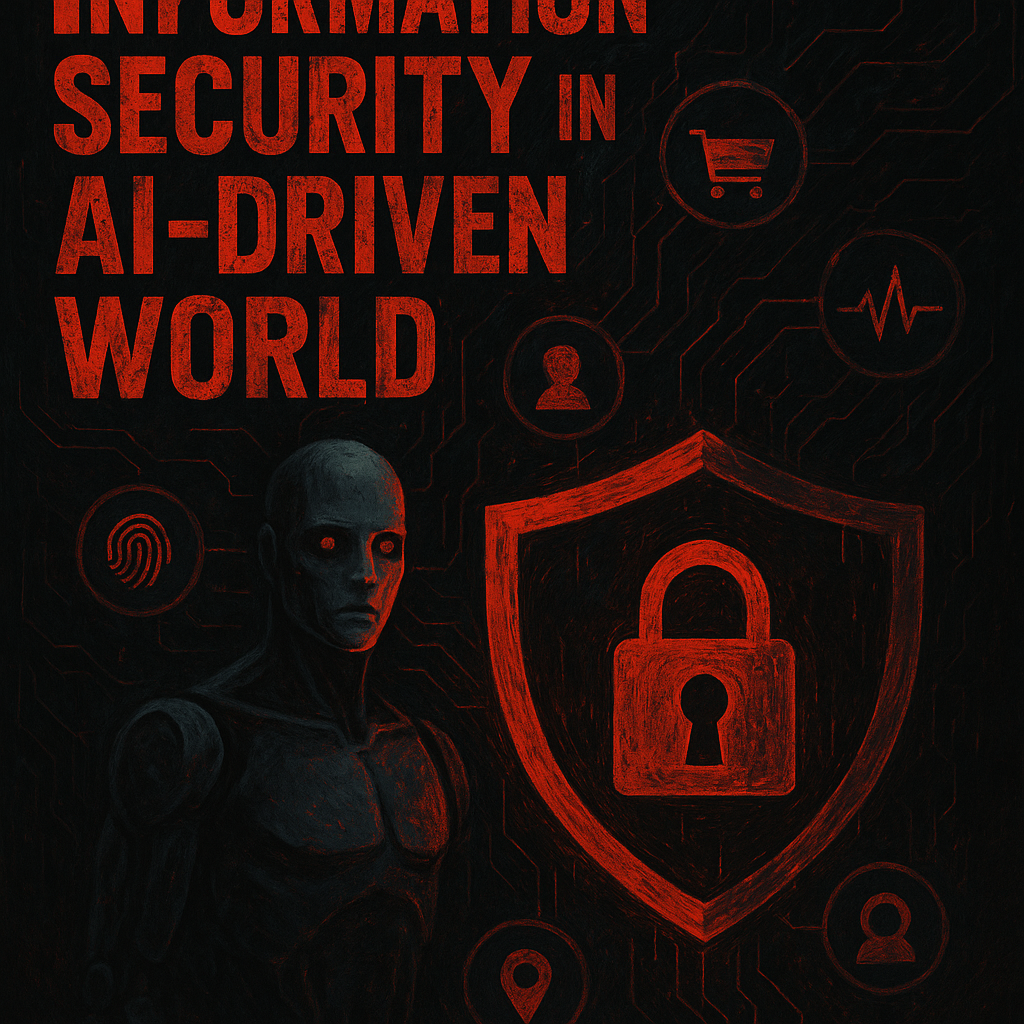

Data Privacy in an AI-Driven World: Challenges, Risks, and the Road Ahead

- Data privacy refers to the right of individuals to control how their personal data is collected, used, and shared.

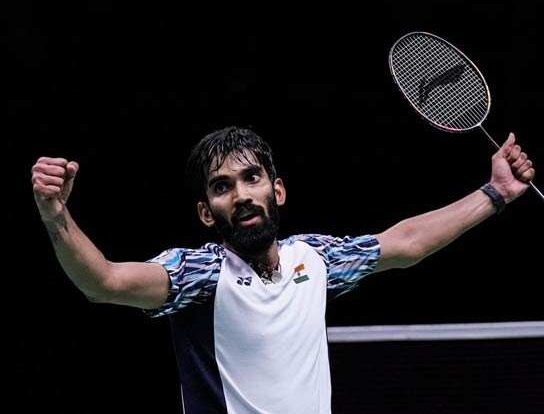

- Advanced AI-driven surveillance systems can track people across entire cities, monitor social behavior, and even recognize emotions.

- Several governments have begun implementing robust data protection laws to protect users in the AI age.

~ An In-Depth Look into the Digital Age’s Most Pressing Concern

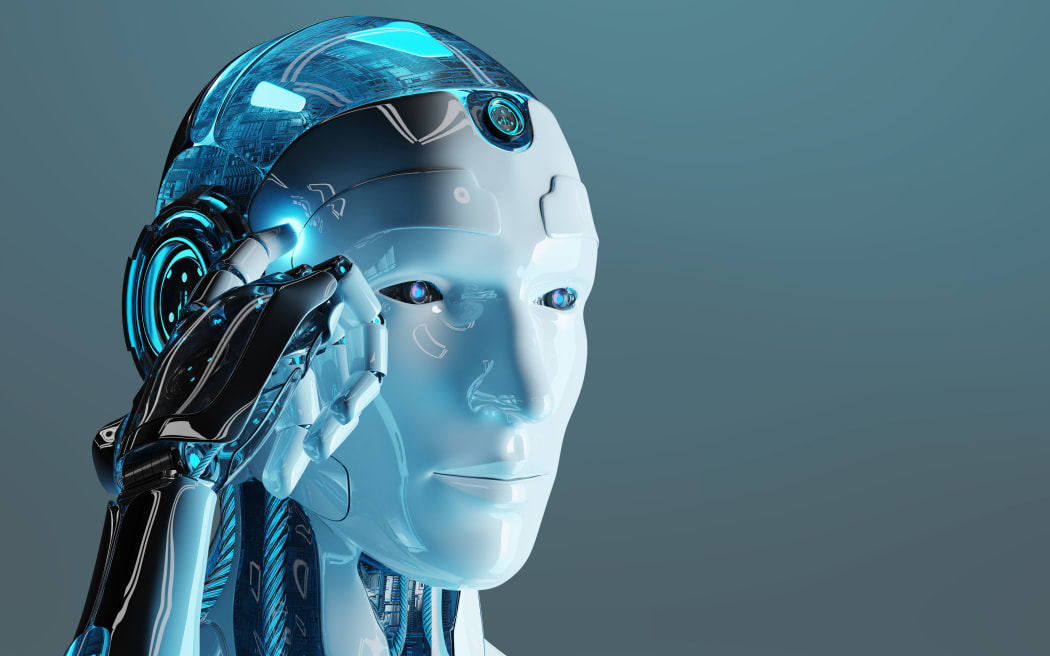

In today’s hyperconnected, algorithm-powered world, artificial intelligence (AI) is no longer a futuristic concept; it is a present-day reality shaping industries, redefining work, and even predicting consumer behavior. However, the rise of AI has brought with it a serious concern that affects individuals, businesses, and governments alike: data privacy.

As AI systems become more advanced, they require vast amounts of data to train, learn, and function effectively. From facial recognition to personalized shopping experiences, AI algorithms rely on data, and that includes highly sensitive personal data. But how secure is our data in an AI-driven environment? What risks do we face? And how can we ensure data privacy without slowing down innovation?

Understanding Data Privacy in the AI Era

Data privacy refers to the right of individuals to control how their personal data is collected, used, and shared. In an AI-driven world, this data often includes:

- Biometric data (face, fingerprint, iris scans)

- Behavioral data (browsing history, purchase patterns)

- Geolocation data

- Health records

- Voice recordings

- Social media activity

AI systems collect and process this data through various channels: smartphones, IoT devices, apps, and digital services. While this enables convenience and personalization, it also raises a crucial question: who owns and controls this data?

Growing risks and challenges:

1. Surveillance and Loss of Anonymity

Advanced AI-driven surveillance systems can track people across entire cities, monitor social behavior, and even recognize emotions. This has sparked a global debate about civil liberties and the right to remain anonymous in public places.

2. Data Breaches and Cyber Threats

As AI expands the surface area for data collection, the risk of large-scale data breaches rises with it. Hackers now target AI systems that hold rich pools of data, and once personal data is stolen it can be misused for identity theft, financial fraud, and phishing attacks.

3. Bias and Discrimination

AI algorithms are only as good as the data they are trained on. Biased training data can lead to discriminatory outcomes in areas such as hiring, law enforcement, and credit scoring.

4. Lack of Transparency

AI decision-making is often described as a “black box,” which makes it difficult for users to understand how their data is being used. This lack of transparency undermines trust and leaves users more vulnerable to abuse.

5. Consent Fatigue

In many cases, users are faced with long privacy policies that they rarely read. This “consent fatigue” leads to implicit data sharing, in which users unknowingly agree to terms that may violate their privacy.

The Role of Laws and Regulations

Several governments have begun implementing robust data protection laws to protect users in the AI age. Some of the most notable regulations are:

- General Data Protection Regulation (GDPR) – The European Union’s landmark law, which provides for data transparency, user consent, and the right to be forgotten.

- California Consumer Privacy Act (CCPA) – Gives California residents more control over the personal data they share with businesses.

- India’s Digital Personal Data Protection Act (DPDP) – Intended to regulate data processing and enforce the lawful use of data.

These laws are essential steps, but they often struggle to keep pace with the rapid development of AI technology.

Ethical AI: A New Imperative

To truly safeguard data privacy, a shift toward ethical AI development is essential. This includes:

- Privacy-by-Design: Integrating privacy measures from the initial stages of AI system development.

- Federated Learning: A technique in which AI models are trained locally on devices instead of collecting data centrally, reducing exposure risks.

- Explainable AI (XAI): Building transparent AI models that can explain their decisions to users in human-understandable language.

- Data Minimization: Collecting only the data necessary for specific tasks and securely deleting it afterward.
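To make the idea of training models locally on devices more concrete, here is a minimal sketch of federated averaging in plain Python. It is an illustration under simple assumptions, not a production approach: the one-parameter linear model, the toy `clients` data, and the function names `local_update` and `federated_average` are all hypothetical. The point it shows is that each device computes an update from its own data, and only the resulting model weight (never the raw records) is sent back to be averaged.

```python
from typing import List, Tuple

# (x, y) pairs that stay on a single device; hypothetical toy data type.
Dataset = List[Tuple[float, float]]


def local_update(weight: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps for y ≈ weight * x on one device's data."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to the weight.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(global_weight: float, clients: List[Dataset], rounds: int = 20) -> float:
    """Repeatedly average locally trained weights, weighted by sample count."""
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        # Each client trains on its own data; only the weight leaves the device.
        updates = [(local_update(global_weight, d), len(d)) for d in clients]
        global_weight = sum(w * n for w, n in updates) / total
    return global_weight


# Hypothetical data on three devices, all following y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.5, 3.0), (2.5, 5.0), (0.5, 1.0)],
]
learned = federated_average(0.0, clients)
print(f"learned weight: {learned:.3f}")  # should land close to 2.0
```

Real deployments usually add further safeguards, such as secure aggregation or noise on the updates, because model updates alone can still leak information about the underlying data.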

What People Can Do

While regulation and ethical frameworks are key, users must also take proactive steps to protect their own data. Here are a few practical actions:

- Read privacy policies before agreeing to data sharing.

- Use privacy-focused browsers and search engines (like DuckDuckGo or Brave).

- Enable two-factor authentication for accounts.

- Limit app access and deny permissions you don’t need.

- Avoid oversharing on social media.

Awareness is the first step to empowerment. The more people understand how their data is used, the better choices they can make in protecting their digital footprint.

The Future of Data Privacy in AI

Looking ahead, we are at a crossroads where innovation must be balanced with responsibility. The future of AI and data privacy will likely include:

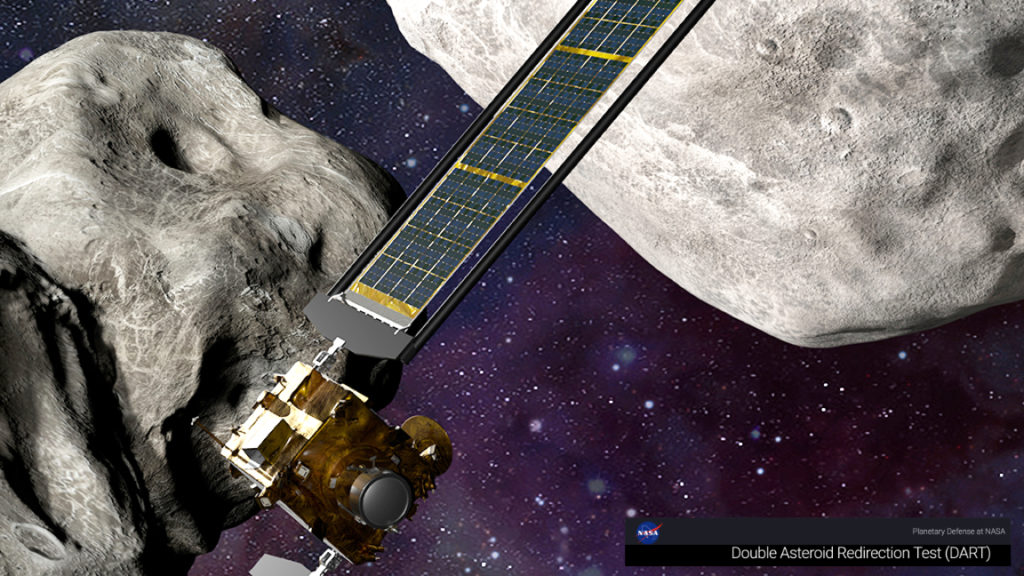

- AI Governance Frameworks: Global collaboration to define rules, standards, and protocols for responsible AI use.

- Privacy-Enhancing Technologies (PETs): Tools like homomorphic encryption and differential privacy that enable data use without compromising personal data.

- User-Centric Platforms: Platforms that allow users to own and monetize their data securely.
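Differential privacy, one of the privacy-enhancing tools named above, can be illustrated with a short sketch of the Laplace mechanism applied to a counting query. This is a simplified example under stated assumptions: the `ages` list, the `private_count` and `laplace_noise` helpers, and the chosen `epsilon` are all hypothetical, and a real system would also track the total privacy budget spent across queries. The idea is that calibrated random noise is added to an aggregate answer so that no single person's presence in the data can be confidently inferred.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: Iterable[int],
    predicate: Callable[[int], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Return a noisy count of matching records using the Laplace mechanism."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one person changes a count by at most 1 (sensitivity 1),
    # so the noise scale is 1 / epsilon. Smaller epsilon means more noise.
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical dataset: ages of eight people.
ages = [23, 35, 41, 29, 52, 67, 38, 45]
rng = random.Random(42)  # seeded only to make the demo repeatable
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

A smaller `epsilon` gives stronger privacy but a less accurate answer, which is the core trade-off an organization has to tune when publishing statistics this way.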

Organizations, startups, governments, and individuals must work together to strike a balance between AI’s potential and the ethical handling of data.

Conclusion:

In an AI-driven world, data is power, and with power comes responsibility. Data privacy isn’t just a legal issue; it’s a human rights issue. As we continue to adopt AI in every sphere of life, ensuring the ethical, transparent, and secure use of personal data must be a global priority.

The choices we make today about AI and data privacy will shape the digital rights of future generations. It’s time we choose wisely.